Artificial Intelligence is the defining topic of our current moment. Some believe it's a "bubble," while others think it’s a drop-in replacement for search engines. I believe it's neither.

But I do believe it is the next "big thing."

A look back on other "big things"

Technological paradigms are constantly shifting. The "hype" cycle surrounding new technology has existed for a really long time.

I grew up as the internet was becoming mainstream. Websites were popping up everywhere; it was the hot topic. I’m certain there were a good few people back then who thought the internet was a bubble that would never replace traditional media. That didn't age well.

I also remember when mobile phones began to saturate the market. This one is tricky, as phones had existed in various forms for a while. But when the smartphone arrived, it changed everything - first for the tech-savvy, and eventually for everyone.

Then came social media, streaming platforms, and so on.

We are always looking for the next big thing; it's in our nature. And sooner or later, those big things usually do come around.

Why AI?

Like most previous paradigm shifts, AI is not technically new. It has existed for decades, but only recently has it become accessible to the masses. Sound familiar?

However, AI has grown in availability and usability at an unprecedented rate. ChatGPT was officially released in late 2022; I'm writing this in late 2025. In just three years, it jumped from a conversational bot to a full-fledged multimodal agentic system capable of complex execution.

This rate of growth is unusual, to say the least. Mobile phones and the internet took their sweet time to reach that "mainstream" status. AI is already there. It has been there for a while, and that, while seemingly exciting, is also terrifying.

The elephant in the room

AI is here to stay. It's not going away, and it's not a bubble. It's the next big thing.

And this is, in layman's terms, really bad.

To understand why this is bad, let's take a look at the impact of each previous big thing, and yes, order matters.

Telephones made communication easier, faster, and more accessible. While this sounds dreamy, it also reduced physical proximity. It wasn't horrible, but it was a start.

The Internet made information accessible to everyone. It shrank the world. However, it made all information accessible to everyone. One could argue this wasn't a huge problem when the user base was primarily adults and professionals.

Smartphones made the internet universally accessible. Suddenly, everyone could get online, anytime, anywhere. Access no longer required a public library or a home computer. This is where things became complicated.

Social Media was the cherry on top. We didn't just have easily accessible information; we had platforms built on the premise of constant engagement. Accessing information was no longer a passive act.

This trend of compounding impacts should give you an idea of what I'm hinting at. I believe that every new "big thing" is more dangerous than the one before it. Why? Simply because every new technology is the accumulation of all previous ones: their good, their bad, and their ugly.

Is it over?

We are tripling our carbon footprint, consuming water at alarming rates, and building massive data centers, all while making it more difficult for the average user to keep up with hardware demands. Yet, we are not entirely doomed.

Don't get me wrong, I don't hate AI. In fact, I was an early supporter. I used to be fascinated by early NLP models: Siri, Alexa, Cortana, Google Now, you name it. I loved the idea of talking to a machine and having it understand. This fascination is also probably the reason behind my interest in video games. Later, I dove into the theory of machine learning and deep learning, following new architectures as they emerged, but this was all just theory. Then came LLMs.

I am torn on Large Language Models (LLMs). I love them; I think they're incredible tools for routine work and brainstorming. But a little too much of a good thing can be bad. LLMs should never be a replacement for you.

Your brain is the most powerful system to ever exist. It's the definition of general intelligence. It's what any AI is trying to mimic. But it simply can't. Not yet, and likely never.

They're called "co-pilots" for a reason. We shouldn't let them replace education, critical thinking, and creativity. Because if we do, we'll be effectively devolving as humans.

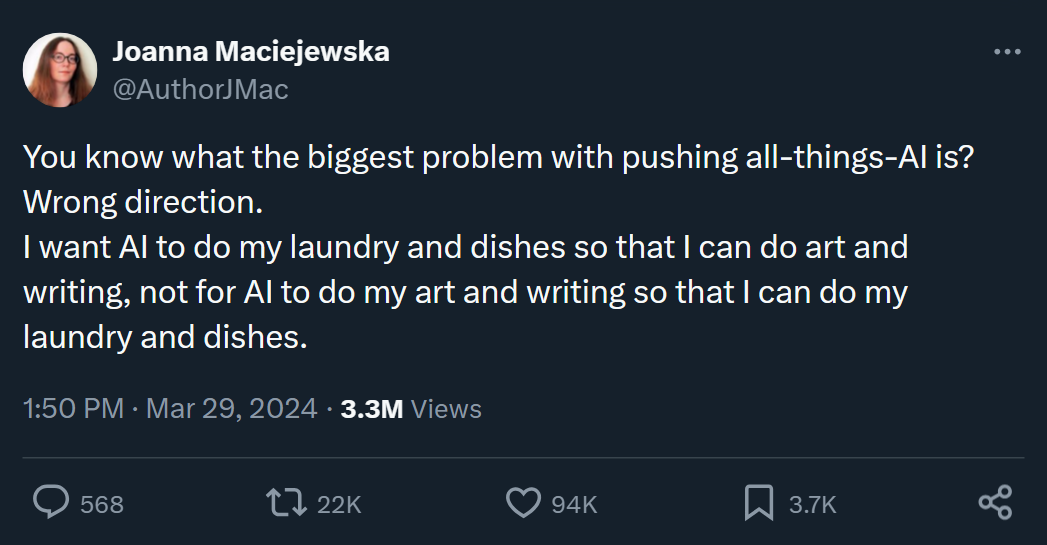

One of my all-time favorite quotes, one that pretty much sums all this up, is author @AuthorJMac's tweet: